SEARCH

Impact Stories

FIWARE Context Broker: The engine for future energy systems

In December 2019, the EC launched the European Green Deal, designing the path for Europe to become the first climate-neutral co...

Opportunities

Designing the Context Broker’s Data Visualisation Dashboard

Take part in the online workshop and help the CEF Context Broker team to design the Context Broker’s data visualisation dashboard.

Opportunities

Cultivate and Leverage the Context Broker Connecting Europe Facility Bui...

Join the online courses for (aspiring) ICT operators, to improve your technical skills and knowledge of the Context Broker.

NEWS

Join the Official Launch of the Context Broker as a CEF Building Block

Join us and discover how the new CEF Building Blocks will help build the next generation of European digital public services.

Ecosystem

Opportunities to Learn More about the Context Broker as a CEF Building B...

Give your organisation the ability to analyse, share and use data in real time at the right time, with the CEF Context Broker.

Tech

Learn More About The Context Broker as a CEF Building Block During the E...

Join us during the European Week of Regions and Cities to learn more about the Context Broker as a CEF Building Block.

Tech

FIWARE Context Broker Launches as a CEF Building Block

The Connecting Europe Facility (CEF) Programme went live with the first release of the Context Broker Building Block.

Tech

HackathonGi 2018 Makes Use of the FIWARE Orion Context Broker

This year, the Girona Hacketon 2018 introduced its attendees to the FIWARE Orion Context Broker.

Opportunities

IoT Tutorial – Orion Context Broker & Arduino

By Telefónica R&D Chile. This tutorial will be divided into parts for easier reading and practical purposes. 1. Introducti...

Tech

CORS Support for Orion Context Broker

The latest release of Orion Context Broker (0.22) includes CORS support for GET requests. What that means in practice? It means...

Ecosystem

Orion Context Broker: introduction to context management (I)

The following post has been written by Fermín Galán Márquez, part of the FIWARE technical team in Telefoni...

Tech

Attend our FIWARE Webinars: FIWARE LAB Cloud and Blueprint Capabilities ...

Today, January 22nd, we will have two webinars open to anyone who would like to learn about how to use FIWARE LAB's Cl...

Tech

Security and Core Context: New Patch Release of the FIWARE Catalogue

The FIWARE Catalogue continues to grow with even more open-source components being added to help the development of your own Sm...

Tech

OPC UA IoT Agent and Facility Enabler: Bringing Manufacturing Data into ...

The Data Economy will revolutionise the Manufacturing Industry. This risks to sharpen the gap between large enterprises and SMEs.

NEWS

ETSI Releases Preliminary Specification of Context Information Managemen...

Group Specification CIM 004 defines a simple way to update or query context data within a Smart Application.

Tech

The FIWARE 8.5.1 Release is Now Live

FIWARE has released updated versions of all NGSI-LD context brokers: Stellio 2.19.0, Scorpio 5.0.6, and Orion-LD 1.8.0.

NEWS

FIWARE Catalogue 8.4.1 is out!

The latest release includes updates to all NGSI-LD context brokers (Stellio, Orion-LD and Scorpio)

Global Summit

RASEEL: Revolutionising the City Digital Economy

RASEEL acts as a context broker, managing contextual information generated by IoT devices and sensors and any city information ...

Ecosystem

CEF Introduces Three New Building Blocks: Discover Their High Value for ...

On 7 December 2018, CEF introduced three new Building Blocks: Big Data Test Infrastructure (BDTI), Context Broker and eArchiving.

Ecosystem

Driving Urban Transformation Through High Value Datasets: BeOpen Project...

BeOpen demonstrates the power of data-driven decision-making through pilots that enhance collaboration, improve data accessibil...

Ecosystem

Empowering Cross-Sector Innovation at the Edge: The COP-PILOT Project

The COP-PILOT project addresses a pressing need: transforming fragmented infrastructure and siloed services into a unified, int...

Ecosystem

Introducing CARTIFactory: Empowering Industrial Interoperability through...

CARTIFactory: Interoperability is key to Industry 5.0. Learn how FIWARE enables smarter, connected, scalable industrial solutions.

Ecosystem

Celebrating the Highlights of 2024

As 2024 comes to a close, we reflect on a year filled with amazing achievements, valuable partnerships, and the unwavering supp...

Impact Stories

IoT AGENT OPC UA

i4Q - empowering industries with reliable data-driven decision-making capabilities

Impact Stories

Noise monitoring: Remarkably less nighttime noise in Leuven’s smart city

In Leuven the nightlife center is situated in the heart of the city, this results in disruptive nighttime noise for the residen...

Impact Stories

CAPRI Platform & the PySpark Connector: a bridge between FIWARE and...

The European co-funded CAPRI project brings cognitive solutions to the process industry by developing and testing an innovative...

Impact Stories

Dr. Peset Valencia, a smart and connected digital hospital

Dr. Peset Hospital of Valencia has deployed its Smart Hospital platform based on FIWARE Open Source approach and technologies.

Impact Stories

CollMi: Technology for a more trustable and sustainable logistics value ...

The CollMi experiment addresses two common issues: the absence of a unified digital communication mechanism and the lack of tru...

NEWS

FIWARE 8.4 has been released

The FIWARE 8.4 patch releases a large number of new security components relevant to data spaces, and new data connectors.

Impact Stories

Smart Street Lighting solution powered by FIWARE: deployment in Sicily

The Municity solution developed by Municipia aims to share the experience of the RUACH Consortium comprised of 40 municipalitie...

Global Summit

How ms.GIS ensures best water quality for Smart Cities

Marcus Scheiber, Founder and Co-CEO, ms.Gis explains how they develop Smart Water and Smart Cities with the help of the right s...

Impact Stories

AgriSpace4Trust optimises energy inputs in olive production

The AgriSpace4Trust aims to create data hubs that utilise local weather stations or agro-environmental sensors accessible to a ...

Impact Stories

CO2-Mute: Fighting CO2 emission with data space

The CO2-Mute aims to support local governments in their efforts to deploy policies for sustainable mobility and urban green inf...

Impact Stories

eVine2Wine: Sharing vineyard data with the wine value chain

eVine2Wine was developed to enable wine traceability of products coming from small wine producers that act in multi-actor wine ...

Impact Stories

BARET Smart Destination Solution

A flexible and modular application aimed at collecting, processing, and comparing large volumes of data from an immense variety...

Impact Stories

eXtended Digital Twin for Smart Building

XDT aims to improve energy efficiency, it defines a holistic view of the building on: sustainability of logistics, energy consu...

NEWS

Enershare project received 8 million euros to develop the first Common E...

The Enershare project will develop and deploy a unique and first of its kind Common European Energy Data Space.

Impact Stories

La Palma Smart Island: Monitoring a volcanic eruption

Thanks to FIWARE, the Authority of La Palma has access to real-time data informing inhabitants on air quality risk and uncertai...

NEWS

FIWARE Foundation and AWS join forces to develop digital cities

Amazon Web Services has joined FIWARE Foundation as a Platinum Member.

Smart Cities

How the Smart Territory Framework helps territories create smart and sus...

AWS and FIWARE develop digital cities and make them sustainable, liveable and inclusive for everyone by implementing the Smart ...

NEWS

CEFAT4Cities announces six winning projects of European Virtual Hackathon

The leaders of the winning projects will be awarded in a dedicated ceremony on 15 September at the FIWARE Global Summit in Gra...

NEWS

FIWARE Foundation and Alastria Association demonstrate strong market imp...

Pioneer use cases for the Public Administration and Agrifood sector now available integrating Blockchain.

Impact Stories

Molina de Segura: Smart City platform and IoT network with FIWARE

The platform integrates smart services of Molina de Segura based on contextual municipality information to support decision mak...

Impact Stories

Innovative Mind Mapping System Connecting to Smart City IoT Networks

The CEFAT4Cities project has created a processing pipeline that ingests public services in multiple EU languages.

Impact Stories

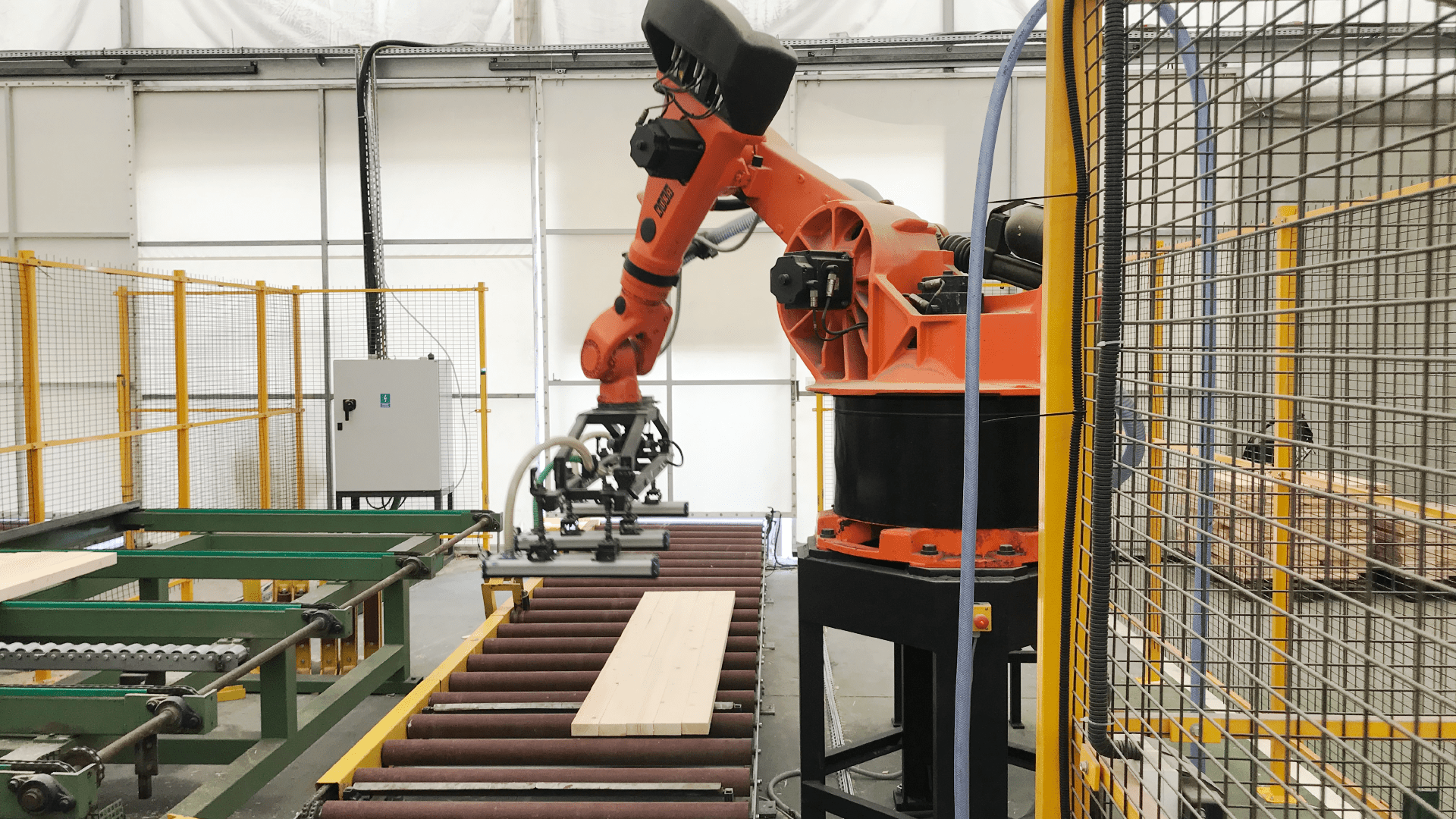

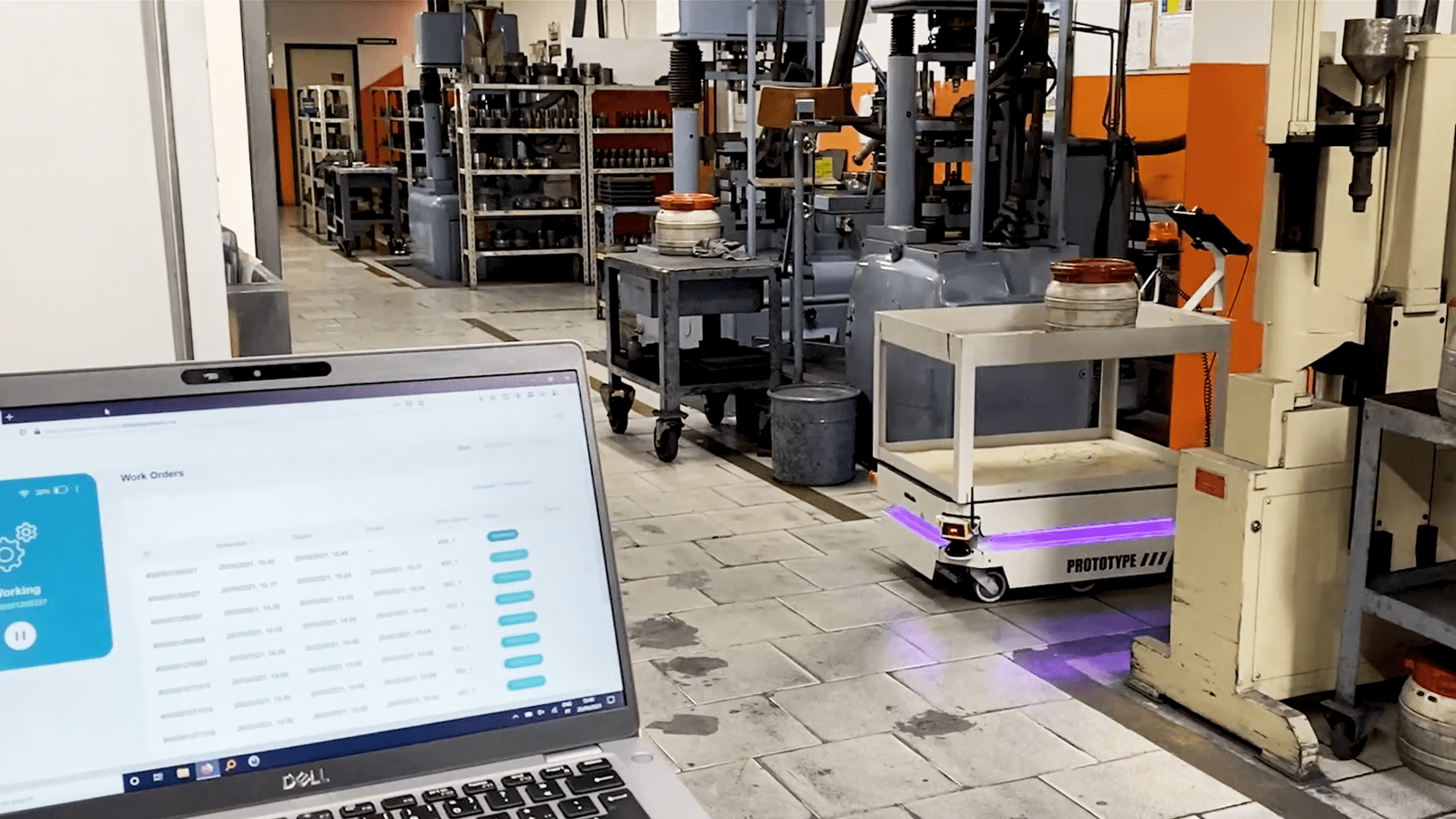

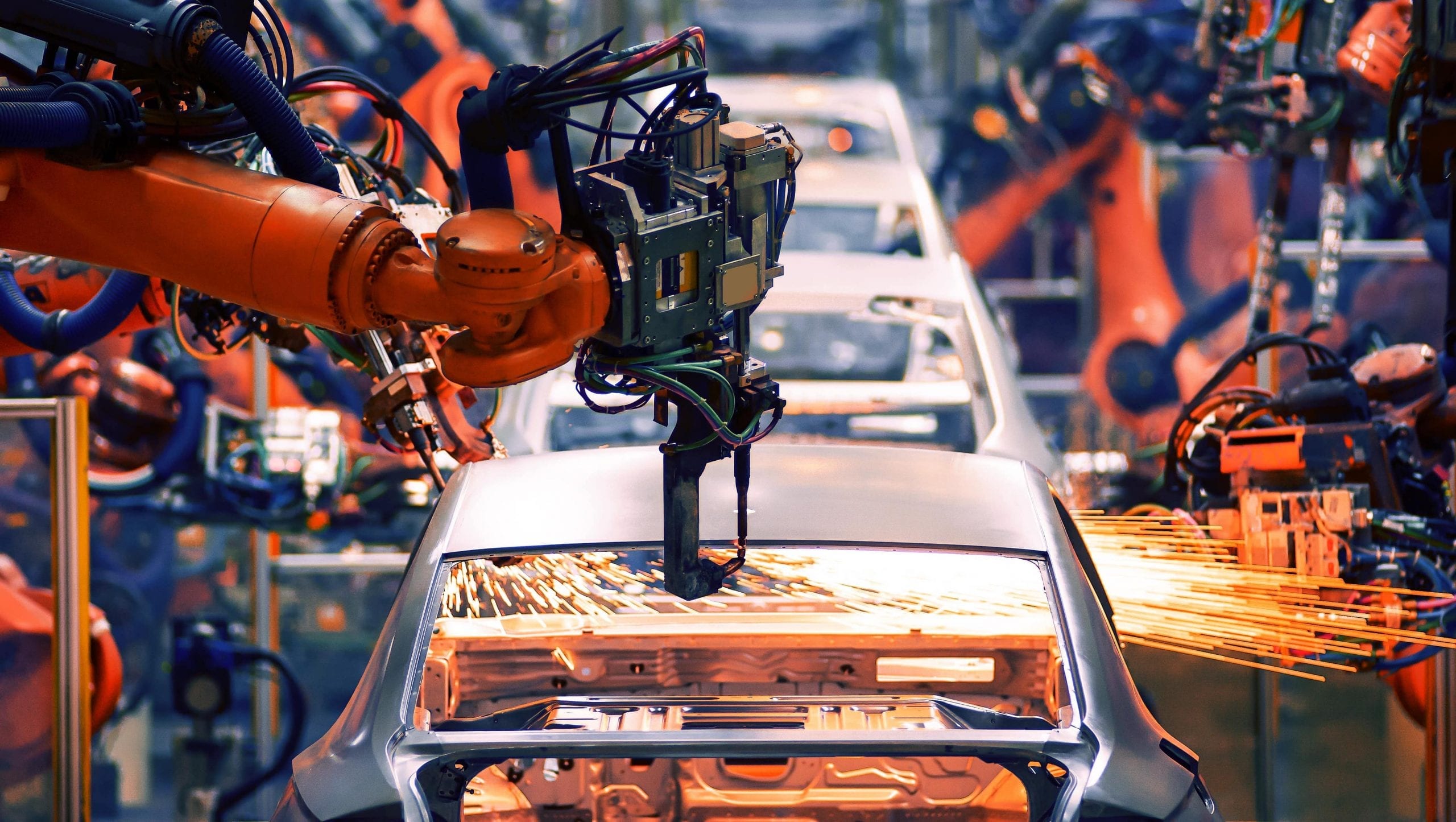

Novel IoT agent using FIWARE in automated Smart Factory solution

Using FIWARE enablers, CONTRA system offers an added value to the manufacturing company.

NEWS

FIWARE Foundation and OASC Strengthen Ties to Drive Open Standards and I...

FIWARE and OASC enter into a new collaborative agreement to continue strategic partnership

Impact Stories

Orchestra Cities, a one-stop-shop multi level IoT platform for cities

EKZ delivers an integrated solution to its municipalities, combining direct sensor data, information shared by nearby cities, a...

Impact Stories

Revolutionary AI Air Pollution System powered by FIWARE

Breeze Technologies believes that distributed networks of small-scale environmental sensors can enable an entirely-new approach...

Impact Stories

Perugia’s digital transition to Smart City powered by FIWARE technology

The city of Perugia is choosing WiseTown with its enabling infrastructure for Smart Cities to help innovate and create value fr...

Impact Stories

Digitalization in Saint-Quentin’s stadiums: City-as-a-Platform Concept –...

FIWARE allowed the city of Saint-Quentin to meet all the technical requirements and constraints to make its solution fully func...

Impact Stories

FIWARE supports Smart City beach surveillance in Montevideo

By integrating cutting-edge FIWARE technology and open data, a unified system has been created for beach visitors to access rea...

Ecosystem

GreenMov project proposes sustainable mobility and open data for smart e...

GreenMov project proposes sustainable mobility and open data for smart ecosystems aligned to the EU Green Deal.

Impact Stories

Open Source Hospital Intelligent Automation Server

Asociación Peter Moss identified a use-case for an Open Source server capable of deploying and managing a wide range of Open So...

Impact Stories

Innovative Fleet sharing solution created using AI powered by FIWARE

A solution which digitises fleet management and permits sharing of vehicles among employees, whether for transportation of good...

Impact Stories

Eridanis: A startup growth story enabled by FIWARE

Starting up a tech company these days is far from simple, unless one can leverage advanced technologies giving a kick start to ...

Impact Stories

Autonomous Manufacturing for Custom Designed Products using FIWARE

The FASTEN project aims to provide an open, modular, integrated, and standardized IoT framework to produce and deliver tailor-d...

Impact Stories

FEATS – FIWARE Enabled Autonomous Transport System

Warehousing and logistics companies are increasingly looking to innovative robotics solutions for the transportation of raw mat...

Impact Stories

Algeciras promotes stargazing using real-time monitoring of the night sky

Algeciras set itself the challenge of using technology to reduce the environmental impact produced by the municipality’s public...

Impact Stories

Innovative eHealth device for air quality using FIWARE

WOBCOM’s WOB.smart service facilitates proper ventilation by automatically measuring CO2 levels via sensors in everyday situati...

Impact Stories

Snap4City: FIWARE powered smart app builder for sentient cities

Snap4City is a 100% open-source platform has at present a wide range of activities in the smart city and IoT/IoE integrated dom...

Impact Stories

Smart Irrigation System implemented in Cartagena’s city

The Cartagena City Council has led the digital transformation of the city, incorporating new technologies to improve the manage...

Impact Stories

A/RporTWIN: FIWARE powered AR tool created for airport operations

A/RporTWIN is the next digital twin powered by the FIWARE platform concerning the management of various infrastructures.

Impact Stories

FIWARE integrated IoT platform managing product life cycle

N-Smart provides a modular, flexible, and scalable platform providing a single pane view for an end-to-end IoT solution.

Impact Stories

A Powered-by-FIWARE solution to personalise citizens’ urban experience

The Powered by FIWARE solution provided by Málaga convert the instant data into useful information to drive smart decisions of ...

Impact Stories

Ubiwhere: a single, integrated view of Smart Cities

Ubiwhere aiming at providing city service providers, with innovative technology to enhance their daily operations and processes.

NEWS

FIWARE, iSHARE and FundingBox Launch the i4Trust Initiative That Will Mo...

i4Trust will contribute towards building the foundation for Data Spaces, thereby facilitating trustworthy and effective data sh...

Impact Stories

SARA IoT platform: designed to operate with maximum efficiency

SARA is a FIWARE-based IoT platform designed to fit in industrial environments and operate with maximum efficiency.

Impact Stories

Elliot Cloud is helping São Paulo to manage its water resources network

It is well known that water management is a priority for our societies. This is where Elliot Cloud played a key role.

Impact Stories

Collaborating municipalities for disaster resiliency and sustainable growth

Disaster resilience is a common challenge in many Japanese municipalities. Saving the lives of citizens is the top priority mis...

NEWS

FIWARE Foundation and the IUDX Program collaborate on Open Source to pro...

FIWARE and the IUDX Program collaborate to build an Open Source platform that facilitates secure, authenticated, and managed ex...

Impact Stories

Helping improve air quality for thousands of athletes training in urban ...

Air QualityIoTOpen DataSmart CitiesSustainability

Impact Stories

Digitalization in Saint-Quentin’s stadiums: City-as-a-Platform Concept –...

FIWARE allowed the city of Saint-Quentin to meet all the technical requirements and constraints to make its solution fully func...

Ecosystem

Wisdom Across the Board: Antonio Jara

This week, we are pleased to sit down with Antonio Jara, a BoD member since May 2019, and the CEO of HOPU.

Impact Stories

City solutions adaptable, flexible and low cost

Smart CitiesSmart EnergySmart IndustrySmart Mobility

Impact Stories

IoT, AI and Blockchain based platform improving livestock Farming

Digitanimal aims at increasing farm productivity, and animal welfare with a solution for beef cattle based on a “powered by FIW...

NEWS

Interoperability of FIWARE and GS1 Standards Boosts Innovation in the Io...

The OLIOT mediation gateway (OLIOT-MG), developed by KAIST, has become an integral part of the “FIWARE Catalogue“.

Impact Stories

Cycle routes and air quality monitoring with real citizen’s engagement

The development and maintenance of a healthy living environment are of crucial importance for the health and well-being of the ...

Impact Stories

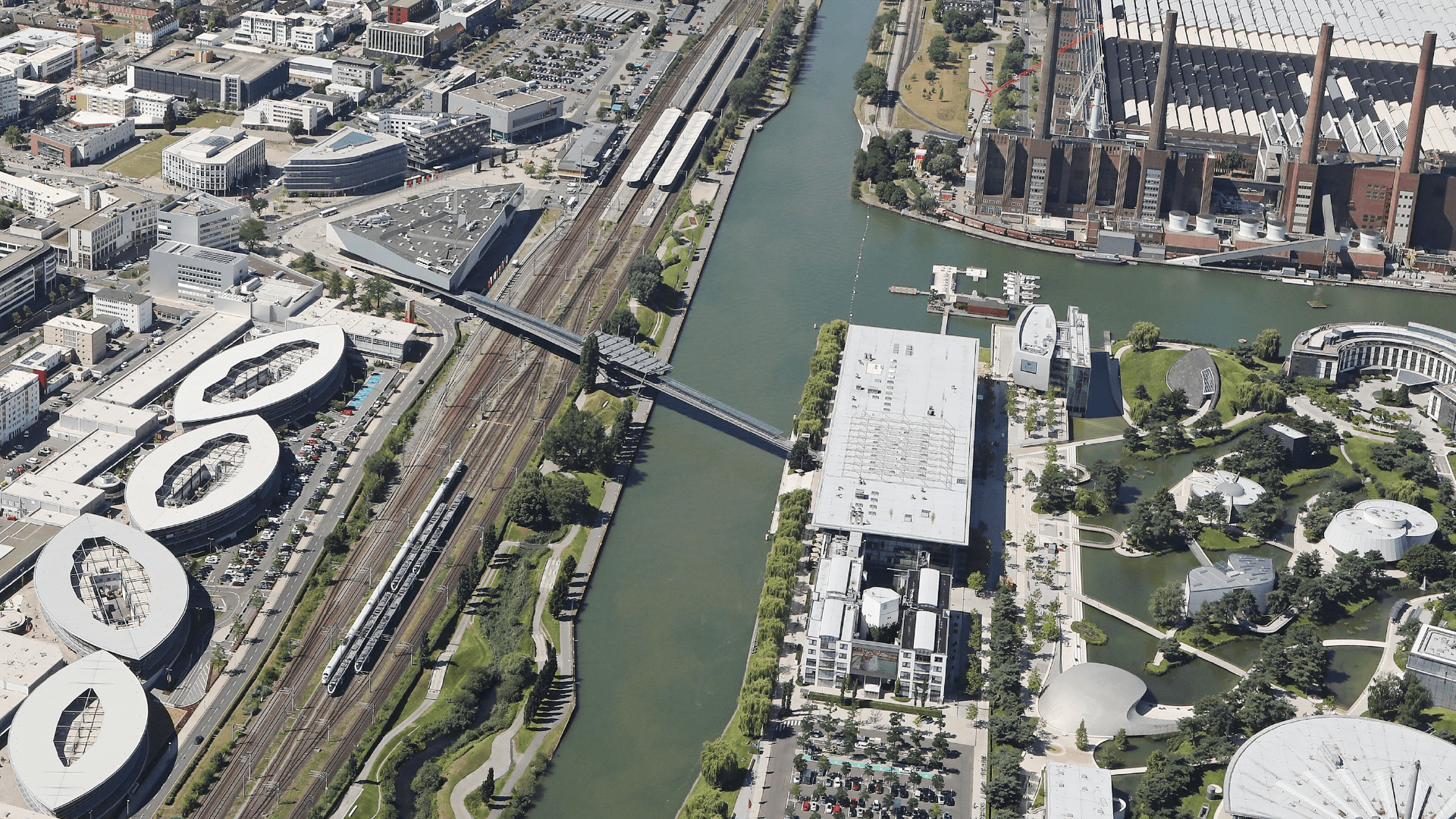

Smart City Lab in Hamburg: FIWARE Open Source services and Data Platform

Engineering GmbH provides for Hamburg, a digital pioneer City in Germany, an OpenSource services and Data Platform based on FIW...

NEWS

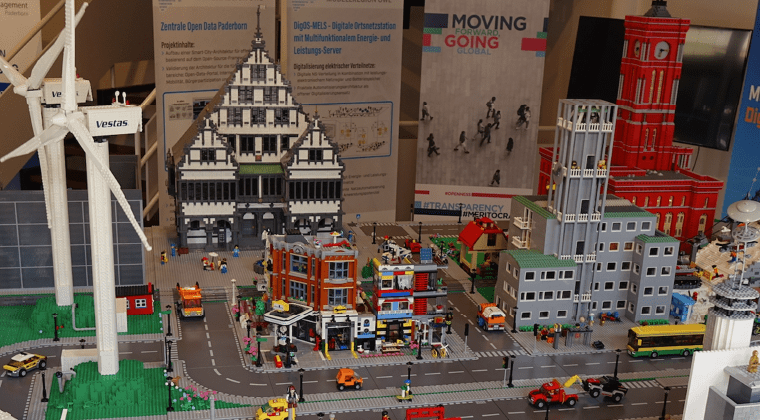

Paderborn bietet anderen Städten FIWARE basierte Open-Source-Lösungen an...

Als digitale Referenz in Deutschland treibt die Stadt Paderborn ihre digitale Transformation mit FIWARE-Technologien weiter voran.

NEWS

Paderborn offers FIWARE Open Source solutions to other cities and hosts ...

A digital reference within Germany, the city of Paderborn is further driving its digital transformation with FIWARE technologies.

Impact Stories

Container and Shipment tracking System in India

Global trade creates big opportunities and bigger challenges. A streamlined supply chain is vital for a smooth flow of goods.

Impact Stories

Safe and comfortable spaces for people and businesses

IoTOpen SourceSmart BuildingsSmart CitiesSmart Spaces

Impact Stories

Reshaping the future of Marinas with Smart Technologies

Blue EconomySmart CitiesSmart PortsSmart Tourism

Ecosystem

Wisdom Across the Board: Jose Benitez

Today we bring you a perspective from Jose Benitez, CEO, and Co-founder at Secmotic, a Spanish-based IoT company.

Ecosystem

Wisdom Across the Board: Ernö Kovacs

We sat together with Ernö Kovacs, the Co-chairman of FIWARE Foundation’s Smart industry Mission Support Committee (MSC).

Impact Stories

A platform to support the decision-making of public administrations

eGovSmart CitiesSustainability

Ecosystem

Wisdom Across the Board: Yasunori Mochizuki

This week, we bring you a fresh perspective from the other side of the world in the person of Yasunori Mochizuki, NEC Corporation.

Impact Stories

A platform to support and optimise Farmers’ work

Open SourceSmart AgriFoodSmart AgriTechSustainability

NEWS

FIWARE Foundation Announces Red Hat as Platinum Member

Red Hat’s membership lends further support to FIWARE as an Open Source standards driver.

Tech

Data Usage and Access Control in Industrial Data Spaces: Implementation ...

Explore the work that the team at UPM have been doing in the scope of Data Usage Control support with FIWARE.

Tech

FIWARE 7.8.2 Has Been Released

The FIWARE 7.8.2 patch release introduces additional security benefits to IoT Agents, such as MQTT-SSL support.

Impact Stories

How biosurveillance and Open Source technologies are jointly contributin...

eGoveHealthOpen DataOpen Source

Ecosystem

Wisdom Across the Board: Agustín Cárdenas Fernández

This week, we spoke to BoD Member Agustín Cárdenas Fernández, Director of Business Transformation, Telefónica Empresas.

Impact Stories

A platform facilitating citizens’ urban experience

Open DataSmart CitiesSmart EnergySmart Mobility

NEWS

The FIWARE-based WiseTown Named the Best Civic Tech Startup

The South Europe Startup Awards annually celebrate startups that have shown outstanding community and business achievements.

Impact Stories

Discover trends and predict anomalies in the shopfloor

AutomotiveIoTSmart FactorySmart IndustrySmart Manufacturing

Opportunities

Up Your FIWARE Game With Our Upcoming Event and #WednesdayWebinars

There are enough reasons to make the next two months the time to take your FIWARE know-how to the next level.

Ecosystem

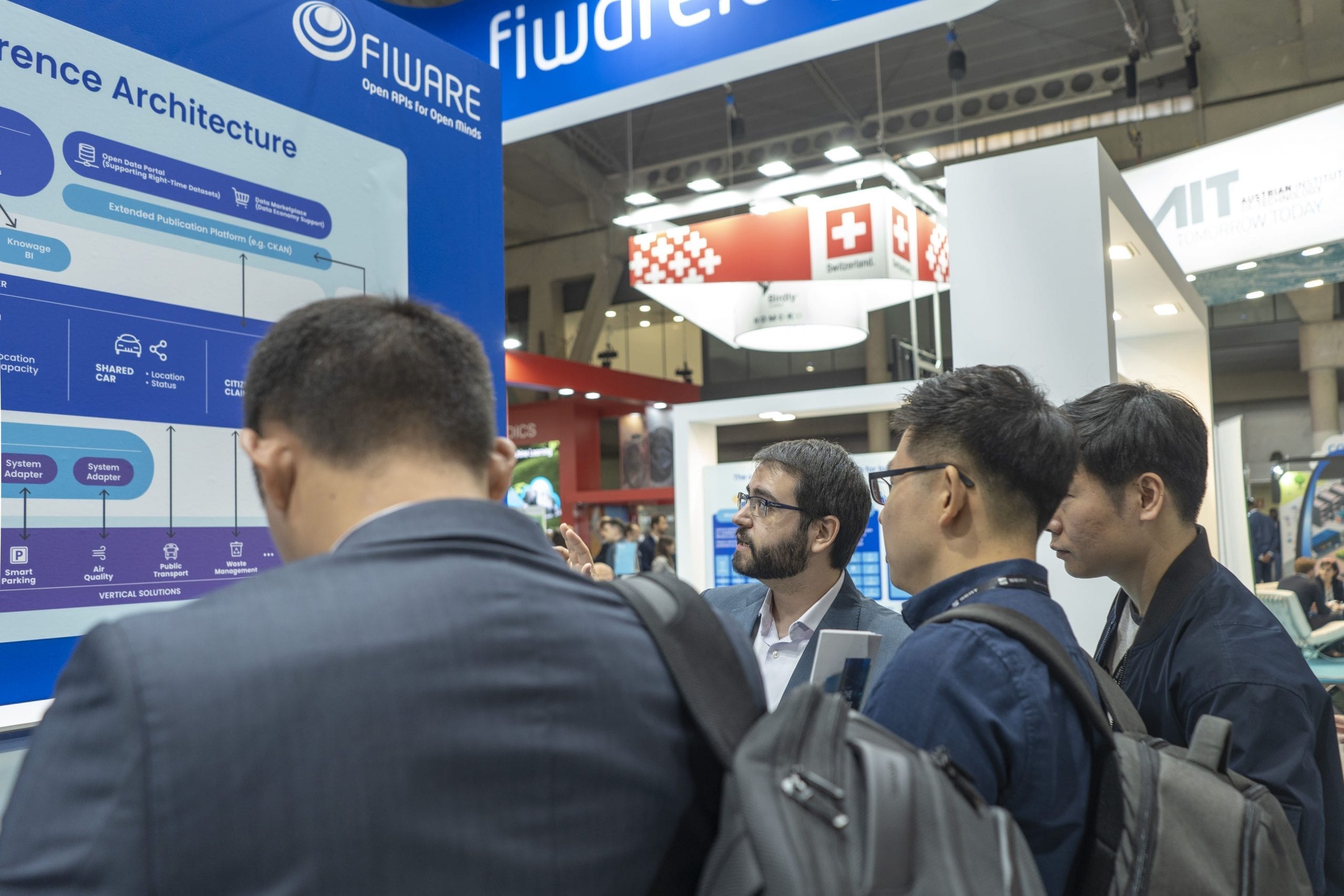

15 Memorable FIWARE Takeaways from SCEWC 2019

This year's edition of SCEWC was a huge success. Let's have a look at the 15 most memorable takeaways from this leading event.

Ecosystem

SCEWC 2019 Recap – Transforming Cities with Minimum Effort but Gre...

At SCEWC 2019 we showed the world what we – the FIWARE Community, are doing to help cities in their transformation journey.

Ecosystem

Meet FIWARE at Smart City Expo World Congress 2019!

Join FIWARE and a group of fantastic co-exhibitors at the world's leading event for Smart Cities: SCEWC 19.

Opportunities

Become a FIWARE Expert at the FIWARE Zone International FIWARE Bootcamp!

FIWARE Zone’s 1st International FIWARE Bootcamp delves deeper into all that FIWARE offers with hands-on training.

NEWS

Success Story FIWARE Foundation e.V. and WOBCOM GmbH

Together with its parent company, Stadtwerke Wolfsburg AG, WOBCOM GmbH plays an important role within the #WolfsburgDigital ini...

NEWS

Success Story FIWARE Foundation e.V. und WOBCOM GmbH

Gemeinsam mit ihrer Muttergesellschaft, der Stadtwerke Wolfsburg AG, spielt WOBCOM GmbH eine wichtige Rolle innerhalb der Initi...

NEWS

IOTA Collaborates with FIWARE to Build the Smart Solutions of the Future

IOTA Co-Founder Dominik Schiener is to speak on Distributed Ledger Technologies at the FIWARE 7th Global Summit.

Opportunities

Webinar Roundup – What is Happening This September?

This month marks the return of the Wednesday Webinars. Jason Fox is returning with 3 additional workshops and webinars.

Opportunities

From Robotics to Smart Cities: Five Open Calls to Help Bring Your Ideas ...

Want to challenge yourself? From Smart Manufacturing to Smart Cities: Bring your ideas to life with the following open calls.

Smart Cities

Innovation in Mid-sized Cities: Saint-Quentin Explores Ways to Optimize ...

Saint-Quentin is exploring the possibilities for mid-sized cities to create value and better services for their city and citizens.

NEWS

The FIWARE Foundation Welcomes 10 New Members On Board

Together with the Foundation they will help to industrialize the FIWARE open software framework for platforms of smart applicat...

Smart Cities

FIWARE and We4City Platform: Promoting Urban Intelligence on Top of Smar...

We4City allows cities to integrate data silos and produce the most important fuel for Smart Cities: Urban Intelligence.

Ecosystem

Fueloyal – Custom Connected Vehicle Platform

FIWARE-Powered American-based Fueloyal provides a 100% customized fleet and asset management platform.

Tech

FIWARE 7.6 Has Been Released

FIWARE 7.6 has been released. The recent 7.6 release also includes several new products within the catalogue.

Opportunities

Take Your Know-how to the Next Level With the FIWARE Workshop on March 28th

Learn about FIWARE, the FIWARE Catalogue, and the opportunities that FIWARE technology brings.

Opportunities

The Connecting Europe Facility (CEF) Programme – New Year, New Opp...

Meet CEF and the FIWARE Foundation at upcoming European events, apply for grants, and discover the 2019 CEF Work Programme.

Ecosystem

Join the Digital Building Blocks for Smart Cities Webinar and Become a #...

Join the “Digital Building Blocks for Smart Cities” webinar, become a #EUSmartCity and learn more about the CEF Building Blocks.

Ecosystem

Catching Up with WiTraC – The Total Wireless Tracking & Meter...

WiTraC has created a wireless RTLS based on IoT, smart sensors and actuators, by improving different wireless protocols.

Smart Cities

FIWARE And Save-a-Space Position Parking At The Centre Of Intelligent Mo...

Save-a-Space, a bespoke cloud parking management solution, enables drivers to find and book available parking spaces.

Tech

Stop By The CEF Building Blocks Knowledge Café at ICT Vienna

Learn more about the Connecting Europe Facility Building Blocks at the CEF Knowledge Café on Dec. 6th during ICT 2018 in Vienna.

Ecosystem

Join the CEF Workshop at the FIWARE Global Summit

Join the CEF Building Blocks Workshop at the FIWARE Global Summit in Málaga on November 28th from 15:15 to 17:00.

NEWS

FIWARE Foundation and Engineering Ingegneria Informatica SpA to Present ...

FIWARE Foundation and Engineering Group look forward to welcoming attendees to the FIWARE World at the CityCube, hall B – booth...

NEWS

FIWARE Foundation and TM Forum Launch Front-runner Smart Cities Program

11 cities from across the world have joined the Front-runner Smart Cities Program, with an open invitation to other cities.

NEWS

The FIWARE Community Takes the Stage in Málaga for the 5th FIWARE Summit

For two days, over 700 cities representatives, developers, thought leaders and entrepreneurs will gather at the FIWARE Global S...

NEWS

StoneOne Becomes a New (Gold) Partner of the FIWARE Foundation

[English Press Release] We are pleased to announce that StoneOne has joined the FIWARE Foundation as a Gold Member.

NEWS

StoneOne Wird Neuer (Gold-) Partner Der FIWARE Foundation

[German Press Release] We are pleased to announce that StoneOne has joined the FIWARE Foundation as a Gold Member.

Industry

IoTSWC ’18 – Meet FIWARE at the Leading IoT Industry Event

FIWARE will be returning to Barcelona to attend the IoT Solutions World Congress from October 16th to 18th.

Smart Cities

WiseTown – Situation Room Platform Used to Monitor the 4th of July...

This year the security of Independence Day, in the City of Independence, Oregon (USA), was monitored by digital technology.

Smart Cities

Meet Bettair®: The FIWARE-powered Solution on a Mission to Map Urban Air...

Bettair® was born for a single, simple reason: to help provide the solution to the world’s growing air quality problem.

Ecosystem

FrontierCities2 MAG2: New Grantees Tell Their Own Story

These new MAG2 startups are already well-versed in the FIWARE Community and they all have a long and engaging story to tell.

Ecosystem

Global Summit: FIWARE In Retrospect and in the Future

Valentijn de Leeuw, Vice President of ARC Advisory Group, reflects back on the FIWARE Global Summit 2018 in Porto, Portugal.

Agrifood

FIWARE: A Standard Way to Develop and Integrate Smart Farming Solutions

Join us at the Smart Agrifood Summit. The world's largest event on innovation and entrepreneurship in the Agrifood sector.

Opportunities

4 Solutions Using FIWARE Are In Phase 2 of SELECT for Cities

SELECT for Cities is based on the notion that cities across the globe are on a continuous search for new and innovative technol...

Agrifood

The Final Countdown – Here’s What Awaits You in Porto

The FIWARE Global Summit in the Smart City of Porto is one week away. Find out what awaits you during this two-day event.

Ecosystem

Transforming Your City into an Engine of Growth with FIWARE

Discover how FIWARE is driving the standards for Smart Cities during the Smart Cities Track at the FIWARE Global Summit.

Ecosystem

Explore Our Data-Driven Smart Industry Use Cases at Hannover Messe 2018

The data-driven Industry use cases based on the IDS reference architecture enabled by FIWARE.

Ecosystem

FIWARE Zone at Andalucía Digital Week

FIWARE iHub FIWARE Zone participated in Andalucía Digital Week in Sevilla on March 12th, 13th and 14th.

Industry

Join FIWARE and International Data Space Association at Hannover Messe 2018

Join FIWARE and IDSA from April 23-27 at Hannover Messe to discover our shared use cases.

NEWS

FIWARE at the Hannover Messe 2018

Together with IDSA, FIWARE presents its use cases at the Hannover Messe from 23 to 27 April 2018 in Hall 8 at Stand C31.

Agrifood

Agricolus Launches Agricolus Essential Free: A Complete and Free Version...

Agricolus Essential is a complete and free version of its "plug and play" interface for precision agriculture, up to 10 hectares.

NEWS

EdgeX Foundry Momentum Continues with California Release Preview and New...

California preview demonstrates significant performance improvements ahead of full code release in Summer 2018 .

Ecosystem

IDSA at the FIWARE Tech Summit

The IDSA and the Industria Conectada 4.0 Initiative, came together during the FIWARE Tech Summit in Málaga.

Tech

Learn How to Code, Hack, and Build Your Way To Success with FIWARE Tech

The year might slowly be coming to a close but we’ve got ONE more FANTASTIC event lined up for you. I am, of course, talking ab...

Smart Cities

Stay Up-To-Date with FIWARE Developments During SCEWC 2017

FIWARE will be present at this year’s Smart City Expo World Congress (SCEWC) to elaborate on our recent developments with...

Ecosystem

FIWARE In Mexico: A Reality

The FIWARE Community is getting more and more global everyday, but it is worth mentioning that Mexico is a pioneering region in...

NEWS

APINF CONTRIBUTES TOP CLASS API MANAGEMENT TECHNOLOGIES TO FIWARE PLATFORM

The FIWARE API Management Framework will incorporate significant contributions from the APInf platform in the next release of F...

Tech

APInf contributes top class API management technologies to FIWARE platform

The FIWARE API Management Framework will incorporate significant contributions from the APInf platform in the next release of F...

Smart Cities

Montevideo Smart Cities Meeting: Innovation True Stories

On August 2nd and 3rd, the Montevideo Smart Cities Meeting will take place in the Conference Center of the Montevideo...

Industry

Situm Indoor Positioning: highest accuracy, zero infrastructure

We are well accustomed to the GPS and map applications, that we use to optimize our routes when traveling from one city to anot...

Industry

Tera & Beeta: smart energy monitoring & efficiency

Although being a young company, launched in 2007, Tera is the project of experienced engineers with a background of more than 2...

Smart Cities

#MyFIWAREstory – WiseTown: city quality enhancer

Recent studies show that data generated in the world doubles every year: most of this information concerns our daily life and s...

Ecosystem

Tera Is Building Beeta using FIWARE to Solve the Fragmented IoT Home Ene...

Italian startup Tera is a knowledge-intensive SME that brings together years of expertise in data science and energy efficiency...

Agrifood

Foodko: Empowering Food Producers & Allowing Smart Logistics

Since food is a relatively low added value segment of the fast-moving consumer goods, food businesses are immensely sensitive t...

Industry

Stepla: Bringing the revolution in Livestock Farming

Livestock farmers may lose up to 10% of their cattle annually and currently they do not have the appropriate tool to avoid it h...

Ecosystem

FIWARE NGSI version 2 Release Candidate

The FIWARE NGSI version 2 API has reached the release candidate status. That means that we consider the current specification q...

Smart Cities

FIWARE at the Campus Party Europe 4th edition in Utrecht

From May 25th to 29th, Utrecht will be gathering a Nobel Prize winner, a cyber-war expert, a hyperloop dreamer, a technology st...

Opportunities

Glue & Blue: The mobile-orientated marketplace offering simple and ...

Each and every day, objects, devices and people are communicating more with other everyday things and we are continuously coord...

Opportunities

IoT & FIWARE along the revolution of Smart Digital Services

We live surrounded by smart technology. We can make “a robot” out of almost anything, and robots are also getting s...

Ecosystem

URBI: The solution to car sharing problems everywhere!

Shared transportation is not so much of a new trend, but one constantly renewing and revitalising its resources and what is has...

Ecosystem

FIWARE Tour Guide Application now ready!

One of the aspects we have been improving during the past few months is the availability of tutorial materials for FIWARE devel...

Tech

FIWARE awarded the best Smart Apps in Campus Party Mexico 2015

In July 2015, the presence of FIWARE in Campus Party Mexico 6 was a success thanks to, among other things, its wonderful stand,...

Industry

What makes a Smart App?

The following post is a collaboration by Benedikt Herudek, consultant at Accenture. We would like to thank him for his collabor...

Opportunities

Learn how to develop an app like Fonesense with FIWARE

Learn, thanks to some videos that we have published on our Youtube channel, how to develop with FIWARE and how to use some of o...

Agrifood

The battle over the “Internet of things”

The following post is an adaptation of the article written by Erich Möchel and published in FM4.ORF. We would like to than...

Industry

Build your own IoT platform with FIWARE enablers

Connecting things Connecting “objects” or “things” involves the need to overcome a set of problems arising in the different lay...

Smart Cities

FIWARE: A STANDARD OPEN PLATFORM FOR SMART CITIES

The following post has been written by Sergio García, architect of FIWARE's Context chapter. We would like to thank ...

NEWS

FIWARE Lab Training

The Budapest node of FI-PPP XIFI and EIT ICT Labs Budapest Associate Partner Group are pleased to announce the FIWARE Lab Train...

Ecosystem

FISPRINKLER: Winners of the FIWARE hackathon at Campus Party Brasil 2015

If there was something at Campus Party Brasil that drew the attention of everyone, that was the award of RS 20,000 that was to ...

NEWS

FIWARE Hackathon at Campus Party Brazil

Show your talent to the world developing an app based on some of the core components of the FIWARE platform. FIWARE is the open...

Ecosystem

Kurento received 2014 WebRTC Conference & Expo V Demo Award

The following post is an adaptation of a press release delivered by Kurento. We would like to thank them for their collaboratio...

Industry

Generic Enablers & eHealth Applications – The Shock Index

The following post has been written by the FI-STAR Team at their blog. We would like to thank their collaboration. If you...

Ecosystem

Keep your scanners peeled with FIWARE

This post tells the use of FIWARE made by the developers of BotCar/Wadjet, winners of the mention as Best IoT Solution in the F...

Agrifood

IMPACT Hackathon (23 September 2014)

On the 23rd of September, 60 people gathered in Madrid for the first hackathon held by IMPACT, and dedicated to mobile apps usi...

Opportunities

Connect your own “Internet of Things” to FIWARE Lab!

This post aims to provide IoT enthusiasts & makers with the basic information to connect any kind of devices to FIWARE Lab ...

Ecosystem

Attend our webinars!

On Monday (March 31) and Tuesday (April 1) we will have 7 webinars open to anyone who wants to participate. Thes...

Opportunities

‘Anybody can have a Smart Home’ by Antonio Sánchez (Open Ale...

Last October I was fortunate enough to attend the FI-WARE Smart City event held at the Palacio de la Magdalena in Santande...

Agrifood

‘How I connected FIWARE to a TupperBot’ by Angel Hernandez

Most people don't get it, but let's be honest, some of us love those endless sessions of programming and idea developme...

Smart Cities

FI-WARE opens Smartcities to Future Internet App Developers

Our recent exhibition in Dublin Future Internet Assembly (FIA, May 7-10th 2013) shows how FI-WARE enablers truly expose open AP...

NEWS

FIWARE iHubs Network Keeps Growing and Maturing With Five New Additions

The FIWARE iHubs Network welcomes five new iHubs from the U.S, Brazil, Morocco and Spain.

Tech

Big Changes to FogFlow – a Cloud-edge Computing Framework for IoT

FogFlow is a cloud-edge computing framework for IoT that orchestrates dynamic data processing flows over context data.

Impact Stories

Sustainable living app welcomes new cities onto digital platform

greenApes was founded in 2012 with the mission to reinforce individual incentives for sustainable living via digital solutions.

Ecosystem

Wisdom Across the Board: Arjen Hof

This week, we had the pleasure of sitting down with Arjen Hof, a member of the FIWARE Foundation Smart Cities MSC and Director ...

Ecosystem

9 Reasons Why You’ll Want to Attend the FIWARE Global Summit in Má...

Discuss how disruptive smart solutions will be created in the future and successful business can be developed around them.

Tech

GSMA launches IoT Big Data Directory and Framework, including FIWARE com...

The GSM Association represents the interests of mobile operators worldwide –nearly 800 operators with almost 300 companie...

Industry

FIWARE enables Seville IPv6 Smartcity Pilot

Last week, the specialized media and several technological blogs published a exciting new, It was an invita...

Opportunities

Agricolus: Making precision farming easier

In 2014, a disaster struck Europe, mainly affecting the region of Umbria in Italy. The olive pest fly caused the loss of up to ...

Opportunities

FIWARE at 4YFN Barcelona – Telefonica, Orange Engineering and Atos joini...

Since last Monday, FIWARE has been present at 4 Years From Now in Barcelona, an event that gathers the main actor of innovation...

Agrifood

Contribute to FIWARE Lab Ecosystem with your own IoT!

The following post was written by Carlos Ralli Ucendo. We would like to thank him for his helpfulness and his collaboration. FI...

Agrifood

Developing IPv6-enabled Apps with FIWARE

This post provides developers with an introduction to developing IPv6-aware and accessible Apps/Products with FIWARE & FIWA...