Kurento, FI-WARE's stream-oriented Generic Enabler, was chosen last month as one of the most innovative WebRTC technologies in the world! Want to know more about Kurento? Read our guest post by Luis López Fernández, Kurento's Coordinator:

Humans don’t like bits. The friendlier an information representation format is for computers, the harder it is for humans to manipulate. If you try to read information represented in formats such as XML or JSON, you will feel your neurons crunching for a while. Our brains have been designed by evolution for processing audiovisual information efficiently, but not for understanding and managing complex data formats.

Perhaps for this reason, multimedia services and technologies are pervasive in today’s Internet. People prefer watching a video to reading an exhaustive document describing a concept or a situation. The FI-WARE platform could not ignore this, so a specific Stream-oriented Generic Enabler has been created for dealing with multimedia information: Kurento.

Kurento is the Esperanto word for the English term “stream”. Hence, Kurento’s name is a declaration of intent about its objectives, which can be summarized in two words: universality and openness. Universality means that Kurento is a “Swiss Army knife” for multimedia, exposing pluggable capabilities that can be used independently of the application or user scenario. Using Kurento you can create person-to-person services (e.g. video conferencing), person-to-machine services (e.g. video recording, video on demand) and machine-to-machine services (e.g. computerized video-surveillance, video-sensors).

Kurento provides support for most standard multimedia protocols and codecs, including RTP, SRTP, RTSP, HTTP, H.264, VP8, AMR and many others. In addition, Kurento is compatible with the latest web technologies, including WebRTC and the HTML5 <video> tag.

Openness, on the other hand, denotes that Kurento has been designed as open source software based on open standards. All pieces of the Kurento architecture have been released under the LGPL v2.1 license and have been made available in a public repository where anyone can freely access the code and the knowledge. This open vision is being reinforced through an open source software community which, in coordination with other FI-WARE instruments, is trying to support, enrich and promote the technology and vision of the project.

If you have ever developed a multimedia-capable application, you might have noticed that most frameworks offer only limited capabilities such as media transcoding, recording or routing. Kurento's flexible programming models make it possible to go beyond these, introducing features such as augmented reality, computer vision, blending and mixing. These kinds of capabilities can provide differentiation and added value to applications in many specific verticals including e-Health, e-Learning, security, entertainment, games, advertising or CRMs, just to cite a few.

For example, we have used Kurento for blurring faces in videoconferences where participants want to hold anonymous video interviews with doctors or other medical professionals. We have also used it for replacing backgrounds or adding costumes in a videoconference so that participants feel “inside” a virtual world in an advertisement. We can also detect and track users’ movements in front of their webcams for creating interactive WebRTC (Kinect-like) games, or we can use it for reporting incidents (e.g. specific faces, violence, crowds) from security video streams.

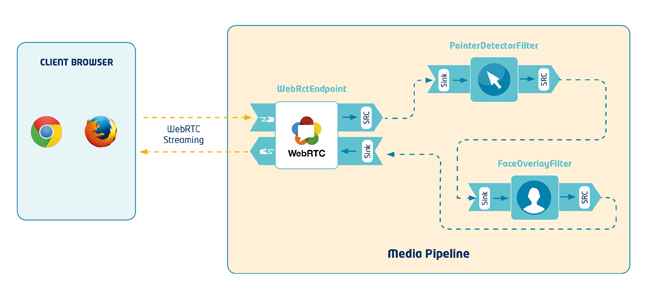

Developing applications with Kurento is quite simple. You can launch your own Kurento instance at the FI-LAB through recipes or through any of our pre-built images. After that, you just need to develop your application using a simple API (Application Programming Interface) that we call the Media API.

The Media API is based on an abstraction called Media Elements. Each Media Element holds a specific media capability, whose details are fully hidden from application developers. Media Elements are not restricted to a specific kind of processing or to a fixed format or media codec. There are Media Elements capable of, for example, recording streams, mixing streams, applying computer vision to streams, and augmenting or blending streams. Of course, developers can also create and plug in their own Media Elements. From the application developer's perspective, Media Elements are like Lego pieces: one just needs to take the elements needed for an application and connect them following the desired topology. In Kurento jargon, a graph of connected Media Elements is called a Media Pipeline.
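To make the "Lego pieces" idea concrete, here is a minimal, self-contained Java sketch of elements connected into a graph. It is a toy model and not the Kurento Media API: the class names `ToyElement` and `ToyPipeline`, the element names, and the `edges()` helper are all hypothetical and exist only for illustration. No media is processed; the sketch only records the topology.

```java
import java.util.ArrayList;
import java.util.List;

public class PipelineSketch {

    /** Hypothetical stand-in for a media element: a named node that hides its capability. */
    static class ToyElement {
        private final String name;
        private final List<ToyElement> sinks = new ArrayList<>();

        ToyElement(String name) {
            this.name = name;
        }

        /** Connects this element's output to the input of another element. */
        void connect(ToyElement sink) {
            sinks.add(sink);
        }

        String name() {
            return name;
        }

        List<ToyElement> sinks() {
            return sinks;
        }
    }

    /** Hypothetical stand-in for a media pipeline: the graph that owns the elements. */
    static class ToyPipeline {
        private final List<ToyElement> elements = new ArrayList<>();

        ToyElement newElement(String name) {
            ToyElement element = new ToyElement(name);
            elements.add(element);
            return element;
        }

        /** Lists every connection as "source -> sink", in element creation order. */
        List<String> edges() {
            List<String> result = new ArrayList<>();
            for (ToyElement element : elements) {
                for (ToyElement sink : element.sinks()) {
                    result.add(element.name() + " -> " + sink.name());
                }
            }
            return result;
        }
    }

    /** Builds an assumed topology: one source, one filter, and two consumers of the filtered stream. */
    static ToyPipeline buildExample() {
        ToyPipeline pipeline = new ToyPipeline();
        ToyElement source = pipeline.newElement("source");
        ToyElement filter = pipeline.newElement("filter");
        ToyElement recorder = pipeline.newElement("recorder");
        ToyElement viewer = pipeline.newElement("viewer");

        source.connect(filter);
        filter.connect(recorder);
        filter.connect(viewer);
        return pipeline;
    }

    public static void main(String[] args) {
        buildExample().edges().forEach(System.out::println);
    }
}
```

Running `main` prints the three connections of the graph, one per line. The point of the design is that the application only decides which elements exist and how they are wired; what each element does internally stays hidden behind it.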

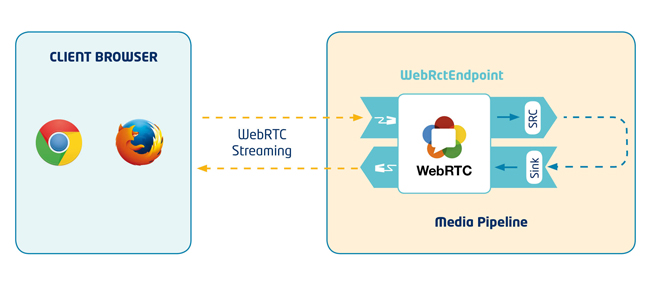

Figure 1. Architecture of the WebRTC loopback example. The media pipeline is composed of a single media element (WebRtcEndpoint), which receives media and sends it back to the client.

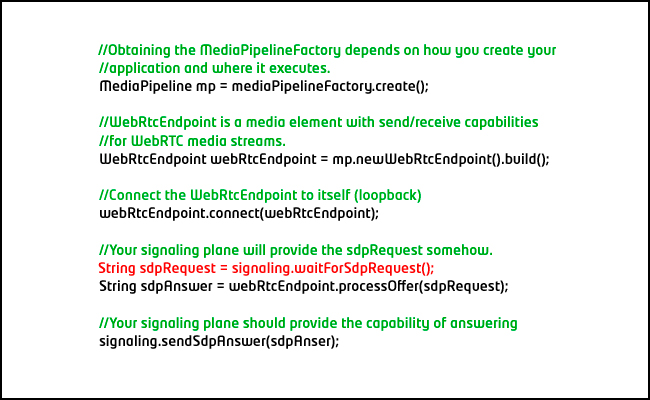

To get familiar with the Media API, let’s create an example. One of the simplest multimedia applications we can imagine is a WebRTC loopback (i.e. an application where a browser sends a WebRTC stream to Kurento and the server sends it back to the client). The source code in Table 1 implements that functionality using the Java version of the Media API. You can also implement it in JavaScript (both for the browser and for Node.js) following the same principles:

Table 1. Source code for the WebRTC loopback example.

Note that, for simplicity, we have omitted most of the signaling code. To have a working example, you need to add your own signaling mechanism. At Kurento, we have implemented a very simple API providing basic signaling capabilities, which we call the Content API. You can take a look at the Kurento developer’s guide if you want a clearer picture of the Content API.

Below you can find links to a fully functional WebRTC loopback example based on Content API signaling.

Full source code and video showing the result of executing the WebRTC loopback example:

Browser source code of the WebRTC loopback example:

https://github.com/Kurento/kurento-media-framework/blob/develop/kmf-samples/kmf-tutorial/src/main/webapp/webrtcLoopback.html

Java (Application Server) source code of the WebRTC loopback example:

https://github.com/Kurento/kurento-media-framework/blob/develop/kmf-samples/kmf-tutorial/src/main/java/com/kurento/tutorial/MyWebRtcLoopback.java

Video-clip showing the WebRTC loopback example working:

https://www.youtube.com/watch?v=HaVqO06uuNA

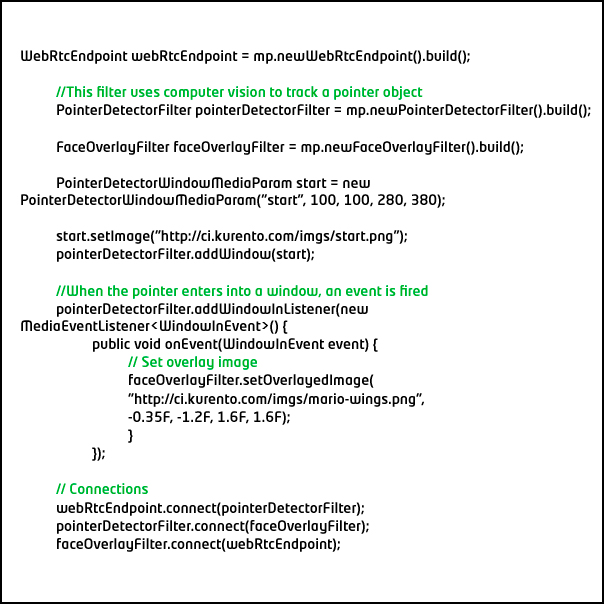

To make the example more interesting, let’s add some interactivity. Look at the code in Table 3 and try to figure out what it’s doing:

Table 3. Source code for the WebRTC + PointerDetectorFilter + FaceOverlayFilter example.

Full source code and video showing the result of executing the WebRTC + PointerDetectorFilter + FaceOverlayFilter example:

Browser source code of the application WebRTC+PointerDetectorFilter+FaceOverlayFilter:

https://github.com/Kurento/kurento-media-framework/blob/develop/kmf-samples/kmf-tutorial/src/main/webapp/webrtcFilters.html

Handler source code of the application WebRTC+PointerDetectorFilter+FaceOverlayFilter:

https://github.com/Kurento/kurento-media-framework/blob/develop/kmf-samples/kmf-tutorial/src/main/java/com/kurento/tutorial/MyWebRtcWithFilters.java

Video-clip showing the application WebRTC+PointerDetectorFilter+FaceOverlayFilter:

https://www.youtube.com/watch?v=5eJRnwKxgbY

If you want to see more complex applications, you can take a look at the Kurento GitHub repository, where we have made available the source code of services involving advanced features such as media processing chains, group communications, media interoperability and smart-city applications.

Figure 2. Architecture of the WebRTC + PointerDetectorFilter + FaceOverlayFilter example

Luis Lopez Fernandez

Coordinator of FI-WARE Stream-oriented GE

Universidad Rey Juan Carlos